We have all been there when developing a J2EE application: the environment nightmares. As your application moves through the stages of integration, acceptance, production and so on, stuff just breaks™ because of misconfiguration, in configuration you have no control over.

A missing database table here, a forgotten JNDI binding there, an unreachable URL, or weird classloading problems because a different jar is being used in acceptance.

This is where SelfDiagnose comes to the rescue. Somehow this little gem gets no press whatsoever. Lately some new tasks have been added to the mix. This blog by Ernest explains something about compile-time dependencies. But more interestingly (to me), SelfDiagnose now contains a CheckAtgComponentProperty task and a CheckEndecaService task.

The CheckAtgComponentProperty task lets you check a property of an ATG component. I know this can be done with ATG's component browser as well, but hold on.

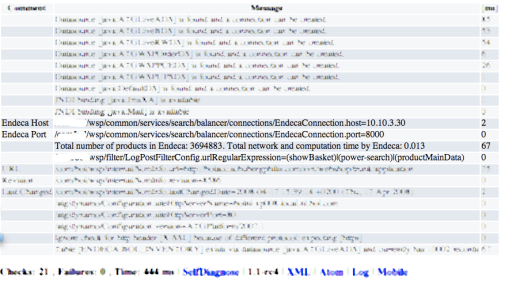

The CheckEndecaService task checks the availability of the Endeca service.

Combining and chaining these tasks makes for a powerful diagnosis. In the following snippet, two ATG properties are queried first and their values are then passed on to the Endeca task. Another nifty SelfDiagnose feature.

This code is heavily customer-specific, but you will get the idea.

    <checkatgcomponentproperty
        component="/wsp/common/services/search/balancer/connections/EndecaConnection"
        property="host"
        comment="Endeca Host"
        var="eneHost"/>
    <checkatgcomponentproperty
        component="/wsp/common/services/search/balancer/connections/EndecaConnection"
        property="port"
        comment="Endeca Port"
        var="enePort"/>
    <checkendecaservice host="${eneHost}" port="${enePort}" query="N=0"/>
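
To make the chaining idea concrete, here is a minimal Java sketch of how a value stored under a `var` name could later be substituted into a `${...}` placeholder. This is only an illustration of the concept, not SelfDiagnose's actual implementation: the class name `VariableChainingSketch`, the `resolve` method and the host and port values are all made up for this example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical illustration of ${var} chaining; not SelfDiagnose's real code. */
public class VariableChainingSketch {

    // Matches placeholders such as ${eneHost} and captures the variable name.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    /** Replaces every ${name} in the template with the value stored under that name. */
    public static String resolve(String template, Map<String, String> context) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = context.get(name);
            if (value == null) {
                // An earlier task never stored this variable, so fail loudly.
                throw new IllegalStateException("No value stored for variable: " + name);
            }
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> context = new HashMap<>();
        // What the first two tasks would do: store a result under the "var" name.
        // These values are invented for the example.
        context.put("eneHost", "search.example.internal");
        context.put("enePort", "15000");

        // What the third task would do: resolve its attributes before running.
        System.out.println(resolve("${eneHost}:${enePort}", context));
    }
}
```

The point of the design is that the third task never hard-codes a host or port. It always checks whatever the environment itself is configured with.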

The really cool and not so well understood part of SelfDiagnose, in my opinion, is that it runs its tasks from inside the environment in which it is deployed. This means that the above example will output the ATG configuration and check the configured Endeca instance of the actual environment.

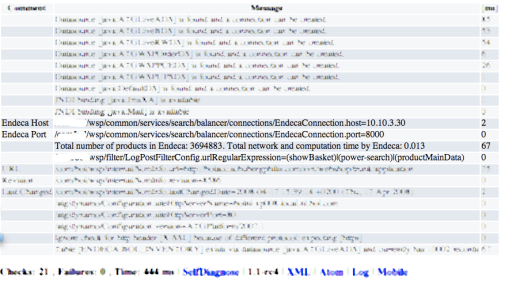

Hitting the selfdiagnose.html url will show:

I have only mentioned the ATG and Endeca tasks, but there are many more that can be extremely helpful.

When something is misconfigured, checking the selfdiagnose URL first can save a lot of time and energy.

Posted by Ronald Pulleman
